Shelly Palmer

Opining about the future of AI at the recent Brilliant Minds event at Symposium Stockholm, Google Executive Chairman Eric Schmidt rejected warnings from Elon Musk and Stephen Hawking about the dangers of AI, saying, “In the case of Stephen Hawking, although a brilliant man, he’s not a computer scientist. Elon is also a brilliant man, though he too is a physicist, not a computer scientist.”

This absurd dismissal of Musk and Hawking was in response to an absurd question about “the possibility of an artificial superintelligence trying to destroy mankind in the near future.” Schmidt went on to say, “It’s a movie. The state of the earth currently does not support any of these scenarios.”

If You Ask the Wrong Question …

HAL 9000 (2001: A Space Odyssey), WOPR (WarGames) and Colossus (Colossus: The Forbin Project – it’s a ’70s B-budget disaster/thriller; look it up) are all pure science fiction. One day, we might reasonably ask if it is possible for sentient computers to evolve, cop an attitude and attempt to destroy mankind. But this is not the kind of AI we should fear. As Mr. Schmidt suggests, the state of the earth currently does not support any of these scenarios.

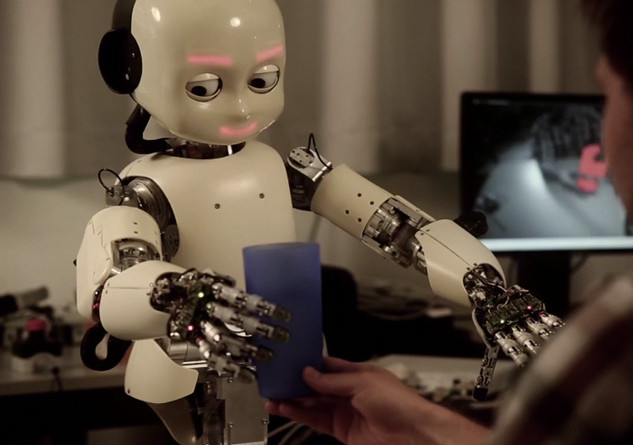

Man/Machine Partnerships

What we should fear are motivated gangs of hackers using AI to better target unsuspecting businesses and financial institutions and, ultimately, sovereign nations. According to Eugene Kaspersky, a cybersecurity expert whose eponymous company helped analyze Stuxnet and discovered Flame, “We are living in the middle cyberage, the dark ages of cyber.”

There are many things going on today that keep me up at night. But, with all deference to Mr. Schmidt, one of the scariest is the evolution of malicious man/machine partnerships.

Hackers have always partnered with machines. They have been using bots and various kinds of viruses for years, and most cybersecurity experts will tell you it’s an arms race. On any given day, the good guys are ahead of the bad guys or vice versa. Today, the open source movement is not just for site builders and app developers. Kaspersky says that cybergangs “trade the technology to other gangs.” Scary? Yes, but manageable.

New and Scary

That said, something very new is on the horizon: man/machine partnerships pairing hackers with purpose-built AI systems. This kind of partnership, backed by purpose-trained AI, will drive an escalation of the cyberwarfare arms race unlike any we have seen before.

Imagine computer viruses that “think.” Would they still qualify metaphorically as viruses? Imagine a strategist that could think at the speed of Google’s AlphaGo, but trained to rob banks, attack medical records or hit whatever other computer-centric infrastructure you can think of. It won’t be a diabolical machine hell-bent on destroying the world; it will be a group of hackers partnering with powerful purpose-built computers and AI training sets, attacking in ways we cannot hope to fully anticipate.

Will we use AI to fight AI? How will that work exactly? Will this be a symmetrical war? How would you know you were under attack? In the recent Google DeepMind Challenge Match, AlphaGo, the AI system that beat a 9-dan professional Go master 4 games to 1, played itself millions of times before it played a human. That contest was between an autonomous machine and a human. In the case of AI/hacker partnerships, humans will use AI systems to attack ordinary computer systems. When we start to fight back using the same technology, it will evolve into some new, strange, iterative AI vs. AI war. It’s hard to imagine how it will end, but it’s easy to see how it could start.

AI systems don’t have feelings. They don’t know right from wrong. They only know what they are trained to do. If we train them to steal, to cheat, to disable, to destroy, that’s what they will do.

So while I am not a computer scientist and therefore, according to Mr. Schmidt, not qualified to comment on how bad guys might put good technology to work in the future, I offer this admonition. One of the very first artificial life forms created by mankind was a computer virus. We used E = mc² to build the atomic bomb. AI is a powerful group of technologies that can, and will, do extraordinary things – both good and bad. So, let’s not fear AI. Let’s respect the technology and be prepared.